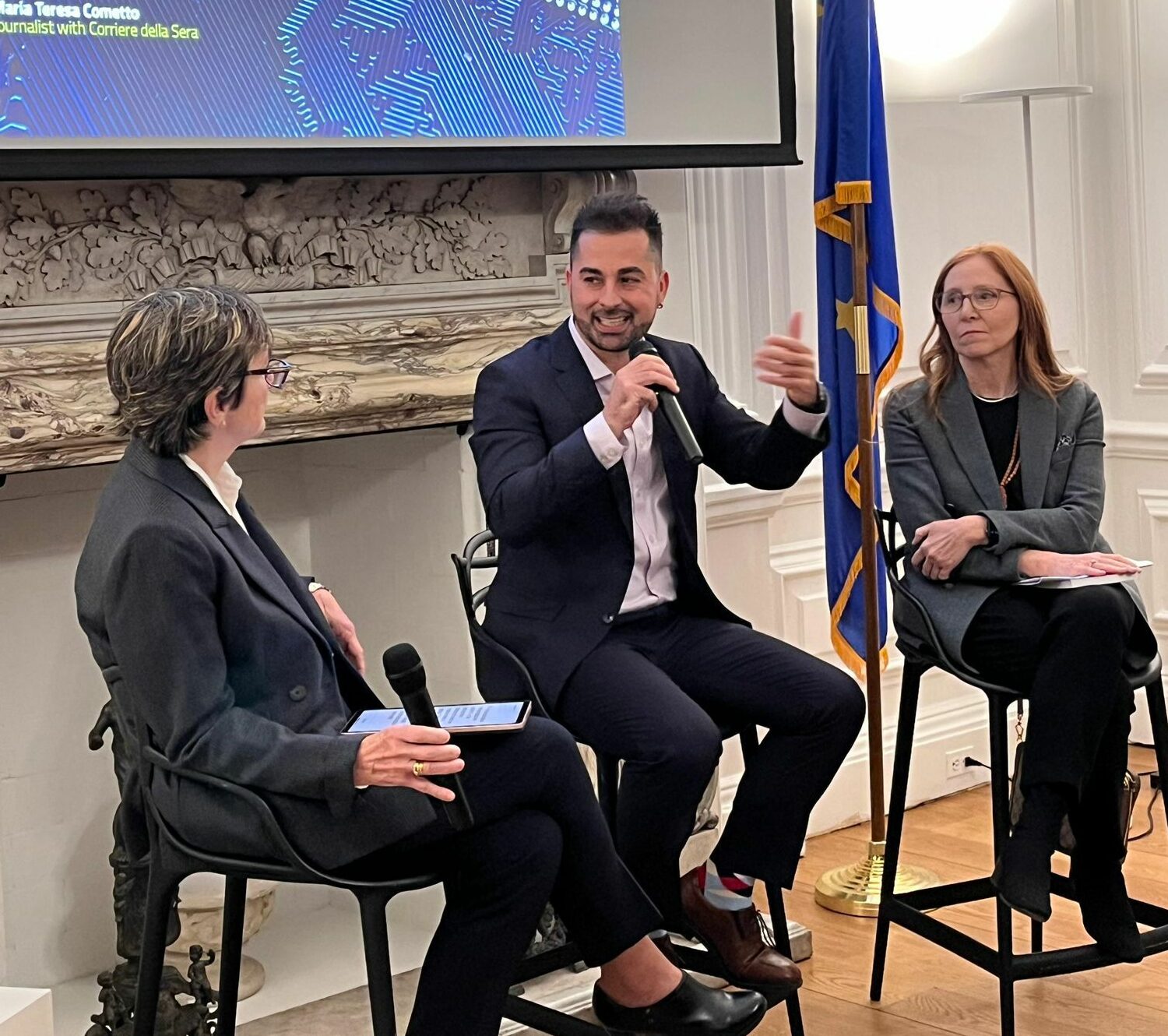

On January 23, the Italian Consulate in New York hosted an event on the theme “Artificial Intelligence and Society,” featuring Francesca Rossi and Enrico Santus, two leading AI experts working at IBM and Bloomberg, respectively. The conversation was moderated by Maria Teresa Cometto, a journalist from Corriere della Sera, who pointed out that New York has become the second-largest AI hub in the United States, after California.

Contributing to the city’s attractiveness for companies in the sector is the large presence of major industries, from financial services to law firms to healthcare, all of which now require artificial intelligence-based solutions.

More and more often we hear about AI and its potential in every field, but what made the event particularly interesting was the humanistic imprint offered by the guests, who were able to focus attention not only on the extraordinary technological advances, but also on the ethical and social implications.

Francesca Rossi, who serves as AI Ethics Global Leader at IBM, has a background in computer science and artificial intelligence, but has since dedicated herself to AI Ethics. “It is a multidisciplinary field where people with technical expertise meet with policymakers and other AI stakeholders to understand, identify and mitigate problems and risks, and find solutions,” Rossi explains. At IBM, work on AI ethics ranges from high-level principles on how the company wants to operate and how it intends its technology to be used by customers, to concrete actions in the day-to-day: trainings to understand values, equity, sustainability, transparency, privacy, employment impact and more; software guidelines and tools for developers; and internal processes to assess possible risks of use cases intended for customers. Externally, Rossi maintains collaborations with various public and private entities around the world, including a fruitful partnership with the Vatican established in 2020 as part of the “Rome Call for AI Ethics” document, of which IBM was among the first signatories.

Enrico Santus, meanwhile, serves as Principal Technical Strategist for Human-AI Interaction (HAI) in the CTO’s office at Bloomberg. “Human-AI Interaction aims to improve the interface between humans and AI in order to achieve collaborative intelligence capable of producing better results than either party can achieve alone,” Santus explains. A prime example is Large Language Models (LLMs), whose popularity stems in large part from their ease of use: people can interact with them directly in natural language, without being machine learning experts or specialized engineers. “This is an area of great interest for HAI, bringing together principles from different disciplines and even including ‘prompt engineering’ activities,” Santus notes.

Within Bloomberg, HAI thus becomes central to the company’s mission: as a leader in the global financial industry, the company needs to facilitate exchanges between domain experts and AI during the training phase, improve the user experience for customers, and ensure evaluation and transparency of model performance so as to nurture user confidence. “It’s about taking care of every phase, from training to deployment to use, evaluation and interpretation, just as if we were designing not only the engine of a vehicle, but also its dashboard: steering wheel, pedals, gearbox. In this way, drivers can get to their destination easily and safely.”

Francesca Rossi has published her second book in Italy, “Artificial Intelligence. How it works and where the technology that is transforming the world is taking us,” designed for a broad audience that is not necessarily tech-savvy. The text provides a clear and balanced picture of the current state of AI: its history, how we got here, its most promising applications, and the risks involved (from discrimination to its impact on jobs and education).

In addition, the book addresses the ethical aspect, pointing to measures that individuals, companies and governments can put in place to prevent negative consequences. Accompanied by contributions from experts in various fields (including Daniel Kahneman), it offers reflections on how AI can integrate with human capabilities without necessarily “surpassing” them in terms of intelligence, emphasizing instead the possibility of synergistic collaboration between humans and machines.

Enrico Santus also leans toward the idea of “collaborative intelligence,” pointing out that humans and machines have different strengths and weaknesses, and that balancing them can produce far better results than relying on only one or the other.

Santus then points out that “the most common misconception about AI is that it is really ‘smart.’” LLMs excel at generating coherent text and mimicking human conversations, but often fail at complex tasks, creating nonexistent information or giving inconsistent answers. In a recent article, Gary Marcus said we are still a long way from so-called AGI (Artificial General Intelligence), but we are approaching what he calls “broad, shallow intelligence”: models that can do many things, but without deep understanding.

Language models can fool people and pass simplified versions of the Turing Test, the so-called “imitation game.” Turing proposed it in 1950: a person had to interact with two “hidden” agents and figure out which was the computer and which was the human being. Today, many people might get confused and misattribute, because the generated content is indeed very believable. However, if you interact with these models for a long time and set traps for them, you will find that they fail in many ways and have a variety of limitations. There are riddles that make them fall apart, inconsistencies, “hallucinations,” and so on, and these elements still allow us to distinguish them sharply from a human being. So yes, we are still far from a truly “human” AI.

According to Santus, this is precisely why Human-AI Interaction (HAI) proves to be essential: in order to exploit the full potential of AI systems and ensure their accuracy, reliability and adherence to objectives, human intervention is needed. AI cannot yet operate in complete autonomy, and the specialized contribution of humans in supervision, interpretation and judgment proves indispensable.

One particularly topical issue concerns bias in AI algorithms, a problem that Francesca Rossi, as IBM’s “AI Ethics Global Leader,” faces on a daily basis. “The classic way bias is introduced is through training data,” Rossi explains. “Systems rely on huge amounts of data, which in turn may contain correlations that are not obvious; the AI ‘learns’ them and then uses them to make decisions or recommendations, producing discrimination against certain groups.”

Many software tools already exist to detect and reduce bias, but according to Rossi, “this is the ‘easy’ part of the job. The real challenge is to train the people who use such tools and improve their awareness of the bias itself.” As an example, she recalls some senior developers who, without proper training in machine learning and bias, believed that by not including “protected” variables (such as race, gender or age) in the models, any risk of bias could be averted. In reality, one must also consider related variables, such as zip code, which often reflects ethnicity and can therefore introduce indirect bias. “Investing in training those who build and use AI is crucial, and is more challenging than installing software. Moreover, even a theoretically bias-free system, if deployed or applied incorrectly, can generate inequities. Think of a model created for the New York City population but then used in a very different demographic context: a bias might emerge that we were not aware of. It is a very complex issue,” Rossi concludes.
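The zip code point can be made concrete with a toy sketch. Everything in it is an assumption for illustration: the two groups, the `ZIP_1` and `ZIP_2` labels, the hand-made records and the `approve` rule are all hypothetical, not data or code from IBM or from the event. It only shows how a rule that never sees the protected attribute can still treat groups differently when another variable is correlated with it.

```python
from collections import defaultdict

# Hypothetical, hand-made records of (group, zip_code); not real data.
# Group A lives mostly in ZIP_1, group B mostly in ZIP_2.
applicants = (
    [("A", "ZIP_1")] * 8 + [("A", "ZIP_2")] * 2
    + [("B", "ZIP_1")] * 2 + [("B", "ZIP_2")] * 8
)


def approve(zip_code: str) -> bool:
    """A 'group-blind' rule: it only ever looks at the zip code."""
    return zip_code == "ZIP_1"


def approval_rate_by_group(records):
    """Share of approved applicants per group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, zip_code in records:
        total[group] += 1
        approved[group] += approve(zip_code)  # True counts as 1
    return {group: approved[group] / total[group] for group in total}


rates = approval_rate_by_group(applicants)
print(rates)  # {'A': 0.8, 'B': 0.2}
```

Although the group label is never passed to `approve`, group A is approved four times as often as group B, because zip code stands in for group membership in this made-up data. Comparing outcome rates across groups in this way is one simple check among many.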

Another front of discussion revolves around the possible misuse of AI tools such as ChatGPT, especially in educational settings. Francesca Rossi, in an interview with Corriere della Sera, pointed out that the greatest danger concerns the education sector: “Students cheat, doing their homework with ChatGPT, and this is serious because they do not engage in analyzing data and information, or reworking them critically or creatively. The risk is that the generation accustomed to these technologies will lose the ability to critique and innovate.”

In fact, Rossi points out that when a student is asked to write an essay, the goal is not just to produce a good text, but to enable the student to learn an inner process: to acquire knowledge, compare information, evaluate its relevance, structure an argument and then expound it in a coherent form. “If a tool bypasses this process, the student fails to learn not only how to write well, but also how to compare different opinions, how to respect them, how to compromise. These are fundamental skills for a constructive adult. So yes, I see a serious danger to the future of society.”

It is precisely on this issue that Enrico Santus speaks, adding:

“Thank you for this important question. There is no single ‘right’ answer, because the risks associated with AI misuse vary depending on the context. AI, like any tool, is neutral in origin: it is our use of it that determines its positive or negative potential. A bit like a knife, which can be used to prepare food or to do harm, AI can improve people’s lives or end up harming them.

“However, there is a danger that in the long run could become systemic and irreversible: the gradual erosion of critical thinking due to overdependence on technology and the consequent ‘delegation’ of thinking itself.

“I am not particularly concerned about students using ChatGPT for homework: these tools will inevitably be part of their world. What concerns me is that we continue to educate and assess students with criteria and methods designed in a pre-AI era, that is, based on tasks that AI can now do for them. I believe we should rethink the educational system to prioritize critical thinking, creativity and problem-solving skills. We need to build or redesign educational contexts that foster the acquisition of these skills at a higher level.

“Beyond this, there is also a responsibility that falls on each of us. With AI set to permeate every aspect of our lives, we must take the time to consciously reflect on how and why we use it. French philosopher Simone Weil said that ‘attention is the purest and rarest form of generosity.’ I believe this statement is even more true today, in an age when overstimulation and time scarcity constantly distract us. Cultivating attention to what we do and want is essential for responsible use of AI and for staying mindful of our actions.”

Turning to the more practical aspects, the question arises: how is AI used in everyday life?

Enrico Santus points out that “virtually every tool we use today integrates AI in some form, whether it’s Spotify for music suggestions, Netflix for TV series, Uber for optimizing routes, or Google for organizing search results: AI is everywhere.” When it comes to generative AI tools such as ChatGPT, Santus employs them for different tasks: “I have them summarize articles, generate draft reports or posts, and help me formulate more targeted questions. Of course, I have to set up the input very carefully and scrupulously review the output, because it is a collaborative process rather than an automated one. But these tools prove very useful in shortening the time between a first draft and a well-finished final product.”

Ultimately, then, AI is already an integral part of everyone’s life, in sometimes invisible but essential forms, and the prospect of “collaborative intelligence” remains the most promising avenue for harnessing the full potential of this technology, without sacrificing the irreplaceable contribution of the human being.

The article Artificial Intelligence and Society: the views of Francesca Rossi and Enrico Santus comes from TheNewyorker.